Proactive Fault Management in the Cloud

Redundancy is a fundamental concept in dependable computing and is applied to servers, software components, processors, storage, and more. There are two types of redundancy: redundancy in time and redundancy in space. With redundancy in time, operations are repeated several times, whereas redundancy in space relies on redundant hardware or software. Not surprisingly, both types of redundancy come at a cost: redundancy in time generally lowers performance, while redundancy in space increases expenses for hardware and/or storage, since resource capacities have to be designed for at least triple the amount necessary to handle peak loads, and until very recently such a configuration had to be operated 24/7. Cloud computing has the potential to change this situation dramatically: first, it lowers the cost of redundant resources; second, it allows resources to be adjusted dynamically; and third, it allows a huge amount of resources to be used temporarily.
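To make the cost argument concrete, the sketch below compares a static setup, provisioned at a fixed multiple of peak load around the clock, with an elastic setup that applies the same redundancy factor to each hour's actual load. The load profile, the redundancy factor of 3, and the flat price per capacity unit and hour are hypothetical values chosen only for illustration; real cloud billing is more involved.

```python
def static_cost(hourly_load, redundancy_factor, price_per_unit_hour):
    """Capacity sized to redundancy_factor x peak load, running every hour (24/7)."""
    peak = max(hourly_load)
    return redundancy_factor * peak * price_per_unit_hour * len(hourly_load)


def elastic_cost(hourly_load, redundancy_factor, price_per_unit_hour):
    """Capacity rescaled each hour to redundancy_factor x that hour's load."""
    return sum(redundancy_factor * load * price_per_unit_hour for load in hourly_load)


# Hypothetical daily profile in capacity units: quiet night, busy day, moderate evening.
load = [2] * 8 + [10] * 8 + [4] * 8
print(f"static:  {static_cost(load, 3, 0.10):.2f}")
print(f"elastic: {elastic_cost(load, 3, 0.10):.2f}")
```

With these numbers the static setup costs 72.00 and the elastic one 38.40; the gap grows with the ratio between peak and average load.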

Amazon's infrastructure already offers solid dependability at the level of Elastic Compute Cloud (EC2) instances. We want to go one step further: we focus on application-level and performance failures and use EC2's features to dynamically adjust computing resources as a countermeasure to such failures. More specifically, we investigate prediction techniques that anticipate critical situations before they occur, with the goal of avoiding them or at least minimizing their negative effects. Such an approach is called Proactive Fault Management (PFM).
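As a minimal illustration of the proactive idea, the sketch below fits a linear trend to recent response-time samples and raises a warning if the value extrapolated to a given lead time exceeds a threshold, i.e., before the violation is actually observed. Linear trend extrapolation is a deliberately simple stand-in chosen for this example, not the prediction technique investigated in the project; the function names, sampling interval, lead time, and threshold are hypothetical.

```python
def linear_fit(ts, ys):
    """Ordinary least-squares fit of y = a + b * t; returns (a, b)."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    var_t = sum((t - mean_t) ** 2 for t in ts)
    if var_t == 0:  # a single timestamp: no trend can be estimated
        return mean_y, 0.0
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    slope = cov / var_t
    return mean_y - slope * mean_t, slope


def predict_violation(ts, response_times, lead_time, threshold):
    """Extrapolate the trend to (last timestamp + lead_time) and compare with threshold.

    Returns (warning, predicted_value).
    """
    intercept, slope = linear_fit(ts, response_times)
    predicted = intercept + slope * (ts[-1] + lead_time)
    return predicted > threshold, predicted


# Response time (s) sampled once per minute; threshold 8 s, lead time 5 min (hypothetical).
minutes = [0, 1, 2, 3, 4]
response = [1.0, 2.0, 3.0, 4.0, 5.0]
warn, predicted = predict_violation(minutes, response, lead_time=5, threshold=8.0)
print(warn, predicted)
```

Here the predictor warns although every observed sample is still below the threshold. A PFM controller could use such a warning to, for example, start an additional EC2 instance early enough for it to be ready within the lead time.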

We address the following research questions:

- What are promising redundancy management strategies? Is it better to start multiple small computing instances or one big instance?

- What is the performance overhead of each option?

- What are the requirements for successful failure prediction (e.g., lead time, false positive rate)?

- How can the cost factors for operation, switching, user-perceived failures, etc. be incorporated into a single cost function?

- What is the quantifiable benefit in terms of total cost of ownership, service availability, and reliability?
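One possible way to approach the cost-function question is to sum the three factors over discrete time intervals: an operation cost per running instance, a switching cost per instance started or stopped, and a penalty per unit of demand that exceeds the provisioned capacity, as a proxy for user-perceived failures. The sketch below assumes this additive model, a fixed capacity per instance, and hypothetical weights; it is one illustrative formulation, not the project's cost function.

```python
def total_cost(instances, load, capacity_per_instance,
               op_cost, switch_cost, failure_cost, initial_instances=0):
    """Additive cost over discrete intervals (hypothetical model).

    instances[t] -- number of instances running in interval t
    load[t]      -- demand in interval t, in the same units as capacity_per_instance
    """
    operation = switching = failures = 0.0
    previous = initial_instances
    for n, demand in zip(instances, load):
        operation += op_cost * n                      # paying for running instances
        switching += switch_cost * abs(n - previous)  # starting or stopping instances
        unserved = max(0, demand - capacity_per_instance * n)
        failures += failure_cost * unserved           # proxy for user-perceived failures
        previous = n
    return {"operation": operation, "switching": switching,
            "failures": failures, "total": operation + switching + failures}


breakdown = total_cost(instances=[1, 2, 2], load=[50, 180, 250],
                       capacity_per_instance=100, op_cost=1.0,
                       switch_cost=0.5, failure_cost=0.01, initial_instances=1)
print(breakdown)
```

The weights make the trade-off explicit: raising `failure_cost` relative to `op_cost` favours provisioning more instances, while a high `switch_cost` discourages frequent reconfiguration.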

Experiments Planned

Our experimental scenario will consist of a continuous integration server environment in which developers commit changes to a code repository. Before each commit, the source code is automatically compiled to check for compilation errors and then tested with test cases. Since several teams of developers work concurrently, compilation and testing tasks have to be scheduled on a set of instances such that overall costs are minimal. The challenge is that failures (e.g., a completion time above an acceptable threshold) add to the total cost, so they have to be anticipated using prediction techniques. Experiments will be performed using logs from commercial continuous integration servers to which we have access, as well as publicly available logs from open source projects.
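Since failures are defined here as completion times above an acceptable threshold, build logs can be labelled automatically and a predictor's warnings scored against them. The sketch below computes precision, recall, and false positive rate, the kind of quantities relevant to the research question on prediction requirements. The input format (one duration and one warning flag per build), the threshold, and the sample data are assumptions for illustration.

```python
def evaluate_predictions(durations, issued, threshold):
    """Score failure warnings against builds whose duration exceeded the threshold."""
    tp = fp = fn = tn = 0
    for duration, warned in zip(durations, issued):
        failed = duration > threshold
        if warned and failed:
            tp += 1  # failure correctly predicted
        elif warned:
            fp += 1  # false alarm
        elif failed:
            fn += 1  # missed failure
        else:
            tn += 1  # no failure, no warning
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall,
            "false_positive_rate": false_positive_rate}


# Hypothetical build durations (s) and the warnings a predictor issued beforehand.
durations = [100, 700, 650, 200, 300]
issued = [False, True, False, True, False]
scores = evaluate_predictions(durations, issued, threshold=600)
print(scores)
```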

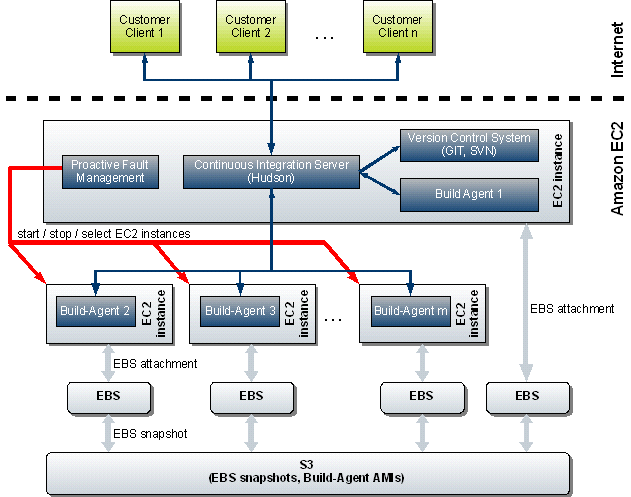

The experimental setup is shown in the following figure:

Customers that use the continuous integration service are depicted in green. Their clients reside outside the Amazon cloud, whereas the continuous integration service runs entirely within the cloud. We plan to use the Hudson continuous integration server, open source software that is also frequently used in commercial environments. To manage software versions, the Hudson server relies on backend version control systems such as Git or SVN. The Hudson server can delegate building, i.e., compilation of the sources, to multiple so-called "build agents". The first agent resides on the same EC2 instance as the Hudson server; under high load, additional build agents can be started on additional instances. Each instance is attached to an Elastic Block Store (EBS) volume, whose up-to-date state is stored as EBS snapshots in Amazon Simple Storage Service (S3). The decision of how many instances of which type (e.g., "small instance", "high-CPU medium instance", etc.) should be used is made by the Proactive Fault Management module, which performs failure prediction in order to find a cost-optimal configuration.
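The PFM module's configuration decision can be illustrated as a small search: enumerate instance types and counts, estimate the completion time of the predicted workload, and keep the cheapest configuration that meets the deadline. The sketch below assumes that build work divides perfectly across build agents and uses a hypothetical catalogue whose type names, relative speeds, and hourly prices are made up for the example; they are not actual EC2 figures.

```python
from itertools import product

# Hypothetical catalogue: build speed relative to "small" and price per hour.
# These are illustrative numbers, not actual EC2 specifications or prices.
CATALOGUE = {
    "small": {"speed": 1.0, "price": 0.10},
    "highcpu_medium": {"speed": 2.5, "price": 0.20},
}


def choose_configuration(predicted_work, deadline, catalogue=CATALOGUE, max_instances=10):
    """Return the cheapest (type, count, hourly_cost, completion_time) meeting the deadline.

    predicted_work is expressed in build-hours on a "small" instance and is
    assumed to divide perfectly across build agents. Returns None if no
    configuration within max_instances meets the deadline.
    """
    best = None
    for name, count in product(catalogue, range(1, max_instances + 1)):
        spec = catalogue[name]
        completion = predicted_work / (spec["speed"] * count)
        if completion > deadline:
            continue  # would cause a (predicted) failure
        hourly_cost = count * spec["price"]
        if best is None or hourly_cost < best[2]:
            best = (name, count, hourly_cost, completion)
    return best


print(choose_configuration(predicted_work=10, deadline=2))
```

An exhaustive search is adequate for a handful of types and counts. In this sketch the failure predictor would supply `predicted_work`; a more realistic model would also account for instance startup delay and the cost of switching configurations.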

Experiments will be performed in two phases. In the first phase, characteristics such as response time, workload, and memory usage of various configurations will be assessed. In the second phase, our PFM approach will be compared to traditional scenarios with a fixed number of instances and predefined instance types.
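For the first phase, the measurements collected per configuration could be condensed into summary statistics. The sketch below groups hypothetical (configuration, response time) records and reports the mean and a nearest-rank 95th percentile; the record format and configuration labels are assumptions for illustration, and the same grouping would apply to workload or memory usage.

```python
import math
from collections import defaultdict
from statistics import mean


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(q / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records):
    """Group (configuration, response_time) records and summarize each group."""
    groups = defaultdict(list)
    for config, response_time in records:
        groups[config].append(response_time)
    return {
        config: {"n": len(times), "mean": mean(times), "p95": percentile(times, 95)}
        for config, times in groups.items()
    }


# Hypothetical response times (s) measured for two configurations.
records = (
    [("1 x small", t) for t in (10, 12, 11, 30)]
    + [("2 x small", t) for t in (6, 7, 6, 8)]
)
for config, stats in summarize(records).items():
    print(config, stats)
```

Reporting a high percentile alongside the mean matters here because failures are defined by completion times above a threshold, which a mean alone can hide.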

People Involved

- Dr. Felix Salfner

- René Häusler